LLM

In the context of LLMs, size refers to the number of trainable parameters the model has.
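
To make "number of trainable parameters" concrete, here is a minimal sketch that counts the weights and biases in a stack of fully connected layers. The function name `count_dense_parameters` and the layer sizes are made up for illustration; real LLMs also contain attention, embedding, and normalization parameters that this toy count ignores.

```python
def count_dense_parameters(layer_sizes):
    """Count weights and biases in a stack of fully connected layers.

    layer_sizes lists the width of each layer, input first.
    (Hypothetical helper, not part of any library.)
    """
    total = 0
    for fan_in, fan_out in zip(layer_sizes, layer_sizes[1:]):
        total += fan_in * fan_out  # one weight per input/output pair
        total += fan_out           # one bias per output unit
    return total


# Toy network: 4 inputs, 8 hidden units, 2 outputs (illustrative sizes).
toy_sizes = [4, 8, 2]
toy_parameter_count = count_dense_parameters(toy_sizes)
print(toy_parameter_count)  # (4*8 + 8) + (8*2 + 2) = 58
```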

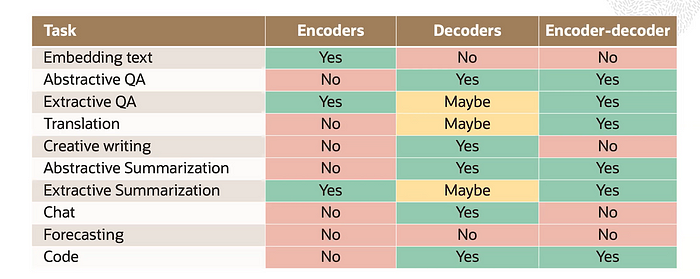

Encoders are designed to embed text: they take a sequence of words and convert it into vectors.

These vector representations are designed to be consumed later by other models for tasks such as classification, regression, and vector search in a database.
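
As a rough illustration of the vector search use case, the sketch below ranks stored vectors by cosine similarity to a query vector. The three-dimensional vectors are hand-written stand-ins, not output from a real encoder, and `nearest` is a hypothetical helper; a real vector database would use much higher-dimensional embeddings and an approximate index.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(query, index):
    """Return the key whose stored vector is most similar to the query."""
    return max(index, key=lambda name: cosine_similarity(query, index[name]))


# Made-up embeddings standing in for what an encoder would produce.
toy_index = {
    "cats purr": [0.9, 0.1, 0.0],
    "dogs bark": [0.8, 0.3, 0.1],
    "stock prices fell": [0.0, 0.2, 0.9],
}
toy_query = [0.1, 0.1, 0.8]  # assumed embedding of a finance-related query

best_match = nearest(toy_query, toy_index)
print(best_match)
```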

Decoders take a sequence of tokens and emit the next token in the sequence, based on a probability distribution that they compute over the vocabulary.
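
The next-token step can be sketched as follows, assuming the model has already produced one raw score (logit) per vocabulary entry. The vocabulary and logits are invented for illustration, and greedy selection is only one possible decoding strategy; sampling from the distribution is also common.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def greedy_next_token(vocabulary, logits):
    """Pick the single most probable token (greedy decoding)."""
    probs = softmax(logits)
    best = max(range(len(vocabulary)), key=lambda i: probs[i])
    return vocabulary[best], probs[best]


# Hypothetical three-word vocabulary and scores for the prompt "the cat sat on the".
toy_vocabulary = ["mat", "moon", "car"]
toy_logits = [2.0, 0.5, -1.0]

next_token, probability = greedy_next_token(toy_vocabulary, toy_logits)
print(next_token, round(probability, 3))
```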

Encoder-decoder models have primarily been used for sequence-to-sequence tasks such as translation.